Easy To Get Started

ManiSkill2 is pip installable and directly runnable in Google Colab.

Starting from a clean Python environment, you can see a robot working in 1 minute.

Just copy & paste the commands below!

pip install mani-skill2

python -m mani_skill2.utils.download_asset ycb

python -m mani_skill2.utils.download_asset minimal_bedroom

python -m mani_skill2.utils.download_demo PickSingleYCB-v0

python -m mani_skill2.trajectory.replay_trajectory --traj-path demos/v0/rigid_body/PickSingleYCB-v0/035_power_drill.h5 --vis --bg-name minimal_bedroom
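If you would rather drive the same steps from a script (for example in a Colab cell), a minimal sketch could look like the following. The command strings are copied from the commands above; the helper names `build_commands` and `run_quickstart` are hypothetical and not part of ManiSkill2, and `python -m pip` is assumed to be an acceptable stand-in for the bare `pip` call. By default the script only prints the commands (dry run) so nothing is downloaded by accident.

```python
import shlex
import subprocess
import sys

# Steps copied from the quick-start commands. Each one is run as
# "<python> <step>", so "pip install ..." becomes "python -m pip install ...".
QUICKSTART_STEPS = [
    "-m pip install mani-skill2",
    "-m mani_skill2.utils.download_asset ycb",
    "-m mani_skill2.utils.download_asset minimal_bedroom",
    "-m mani_skill2.utils.download_demo PickSingleYCB-v0",
    "-m mani_skill2.trajectory.replay_trajectory"
    " --traj-path demos/v0/rigid_body/PickSingleYCB-v0/035_power_drill.h5"
    " --vis --bg-name minimal_bedroom",
]


def build_commands(python=sys.executable):
    """Return each quick-start step as an argument list (hypothetical helper)."""
    return [[python, *shlex.split(step)] for step in QUICKSTART_STEPS]


def run_quickstart(dry_run=True):
    """Print every command; execute it as well when dry_run is False."""
    for cmd in build_commands():
        print(" ".join(shlex.quote(part) for part in cmd))
        if not dry_run:
            # check=True stops at the first failing step.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_quickstart(dry_run=True)
```

Call `run_quickstart(dry_run=False)` to actually execute the steps. Note that the last command opens a viewer window (`--vis`), so it needs a display.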

* The right video is played at 2x speed.

ManiSkill2 features out-of-the-box tasks for 20 skills with 2,000+ objects.

You don't have to collect assets or design tasks by yourself, and can focus on algorithms!

We have verified that all tasks are solvable and collected 4,000,000+ frames of demonstrations.

You can immediately study learning-from-demonstration algorithms without struggling with data collection!

ManiSkill2 provides blazingly fast visual RL environments using our asynchronous rendering system.

On a regular workstation, your robot can interact with the environment and collect millions of visual observations within just 10 minutes!

* Tested on a workstation with an Intel i9-9960X and an NVIDIA Titan RTX using the PickCube environment.
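To get a feel for what this claim implies, here is a rough arithmetic sketch that converts an observation count and a time budget into an average frame rate. The counts of 1 and 2 million are illustrative assumptions (the text only says "millions"); they are not measured benchmark figures, and `required_fps` is a hypothetical helper.

```python
def required_fps(num_observations, minutes):
    """Average observations per second needed to hit the target in time."""
    return num_observations / (minutes * 60.0)


# Assumed targets, for illustration only.
for target in (1_000_000, 2_000_000):
    fps = required_fps(target, minutes=10)
    print(f"{target:,} observations in 10 min -> about {fps:.0f} per second")
```

Under these assumptions, one million observations in 10 minutes works out to roughly 1,667 observations per second on average.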

We provide 3D point cloud observations natively.

Try your favorite 3D learning algorithms and develop your own!

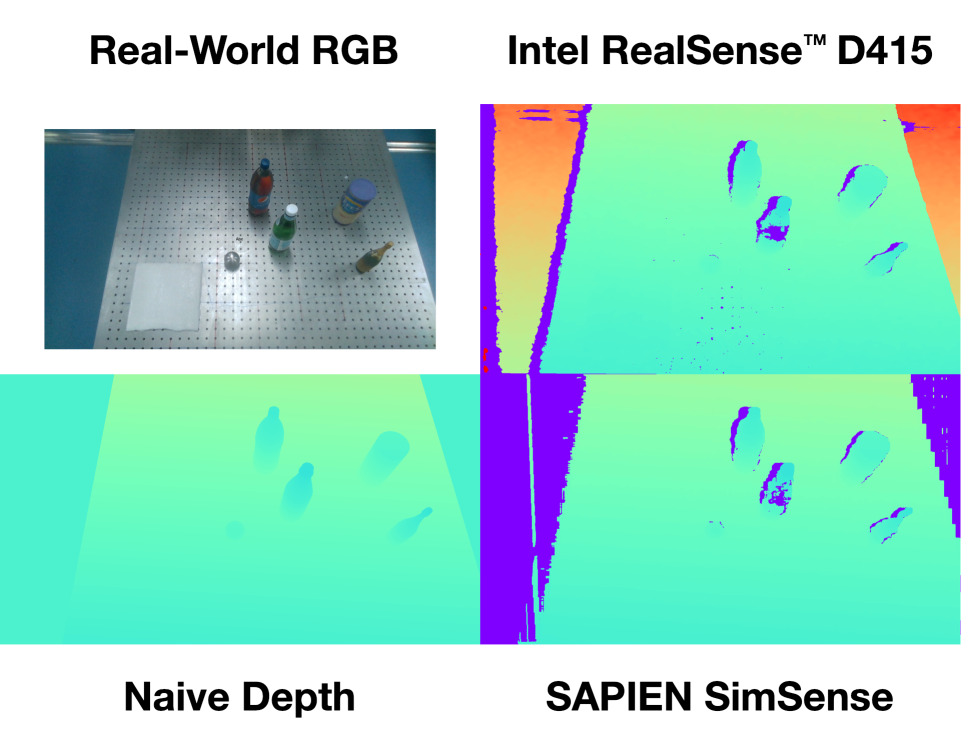

Our physics-grounded depth sensor simulator produces realistic artifacts and has been tested to have a small sim2real domain gap.

Train your vision models in the simulator and deploy them in the real world with limited additional effort!

* See our recent T-RO paper "Close the Optical Sensing Domain Gap by Physics-Grounded Active Stereo Sensor Simulation".

We provide an interactive GUI to inspect and interact with the environments.

See what's going on and what's going wrong!

Comparison of ManiSkill2 with BEHAVIOR-1K, Habitat 2.0, IsaacGym, MetaWorld, ORBIT, and Robosuite on the following features: fast rendering, large-scale demonstrations, object variations, visual baselines, pip installable, 3D point cloud support, open-source engine, hardware compatibility, and interactive GUI.

A step-by-step guide to help you get started with ManiSkill2. Try it now!

Quickstart

Reinforcement Learning

Imitation Learning

Advanced Rendering

Customize Environments

We provide tuned baselines (BC, PPO, DAPG). Our RL library, ManiSkill2-Learn, has been optimized for point-cloud-based RL.

ManiSkill2 embraces a heterogeneous collection of out-of-the-box task families for 20 manipulation skills.

Distinct types of manipulation tasks are covered: stationary/mobile-base, single/dual-arm, rigid/soft-body.

* All scenes below are rendered in SAPIEN with ray tracing. However, the scenes cannot be released due to licensing restrictions.

@inproceedings{gu2023maniskill2,

title={ManiSkill2: A Unified Benchmark for Generalizable Manipulation Skills},

author={Gu, Jiayuan and Xiang, Fanbo and Li, Xuanlin and Ling, Zhan and Liu, Xiqiang and Mu, Tongzhou and Tang, Yihe and Tao, Stone and Wei, Xinyue and Yao, Yunchao and Yuan, Xiaodi and Xie, Pengwei and Huang, Zhiao and Chen, Rui and Su, Hao},

booktitle={International Conference on Learning Representations},

year={2023}

}